Why

-

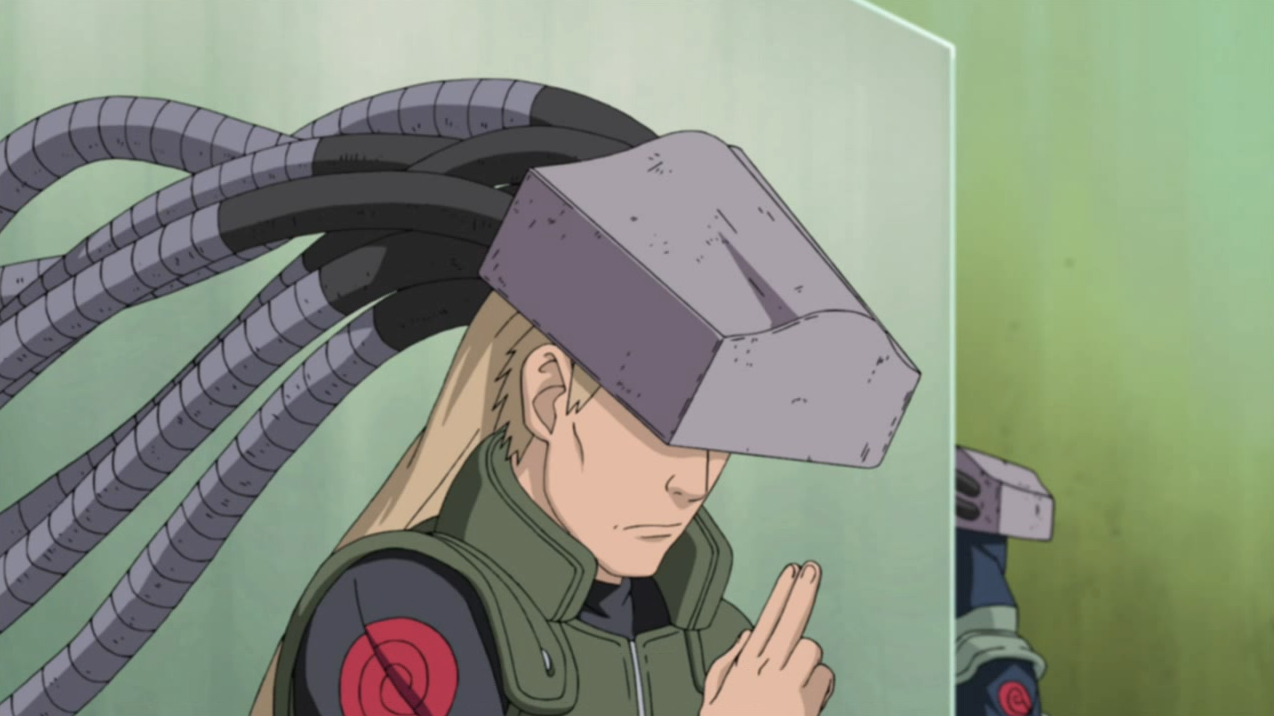

If you can’t speak in a classroom, venue, etc., you want to interact without speaking out

- Convey what you want to say without pronouncing it aloud.

Background

- Researchers at Oxford University and Google DeepMind have developed an artificial intelligence (AI) system that reads a person’s lip movements, trained on thousands of hours of content broadcast by the BBC.

- Little research has been done on facial muscle activity for this purpose.

- Micro-level electromyographic (EMG) activity is difficult to discriminate.

- The recorded action potential is a continuous, time-varying waveform, which is usually difficult to analyze directly.

How

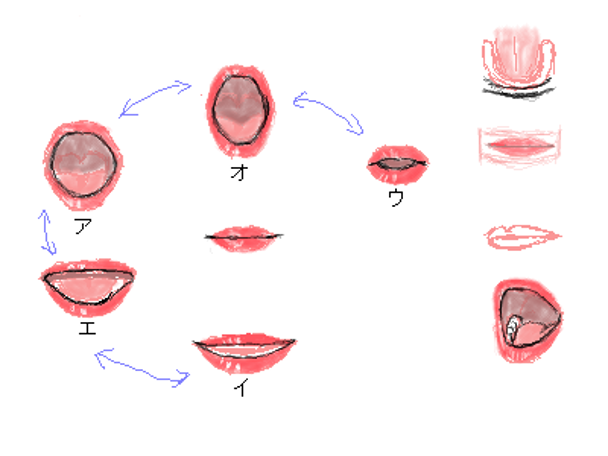

The mouth takes a different shape for each vowel.

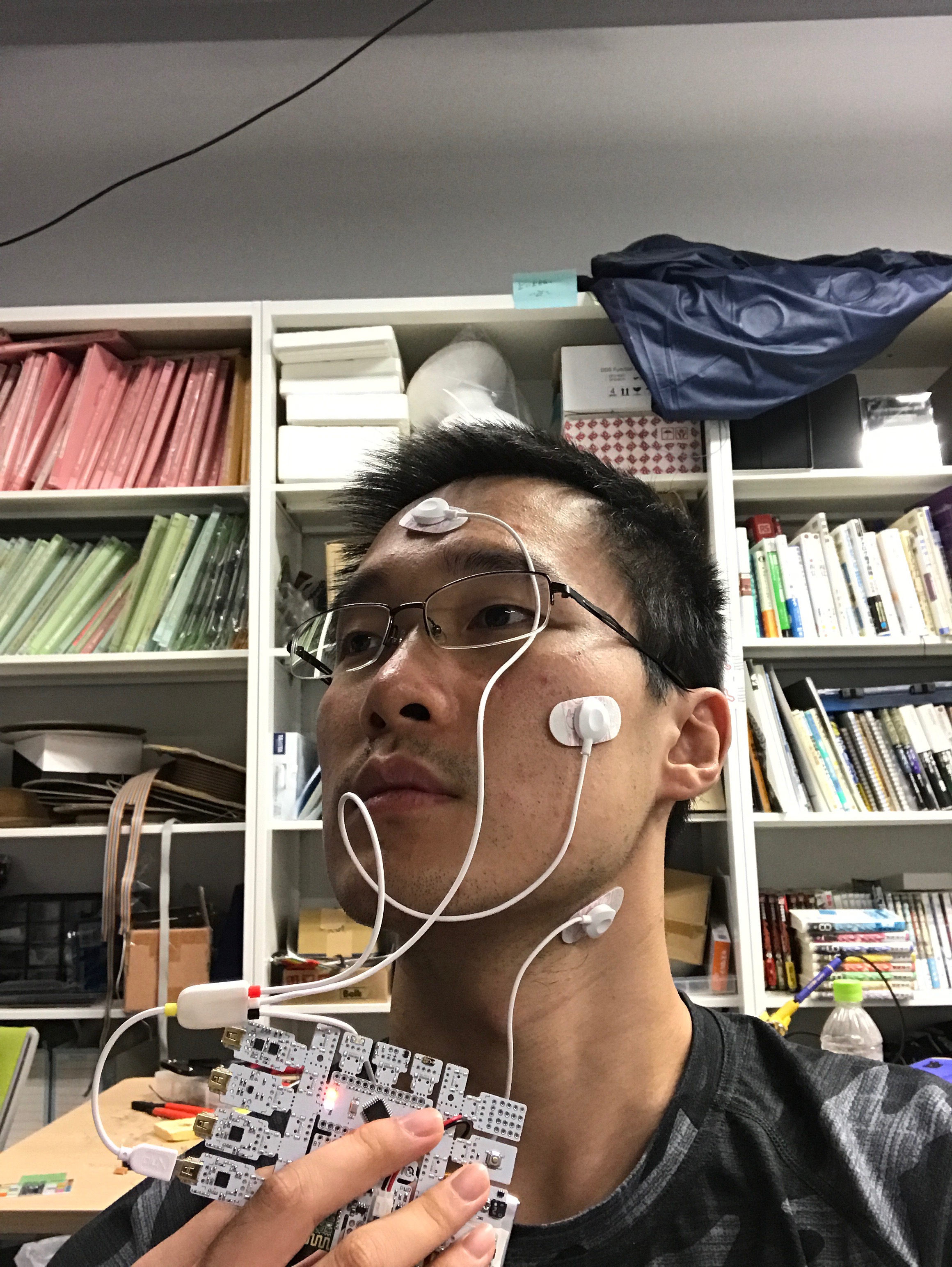

Masticatory EMG data were collected while speaking different vowels (electrodes: chin = plus, cheek = minus, forehead = reference).

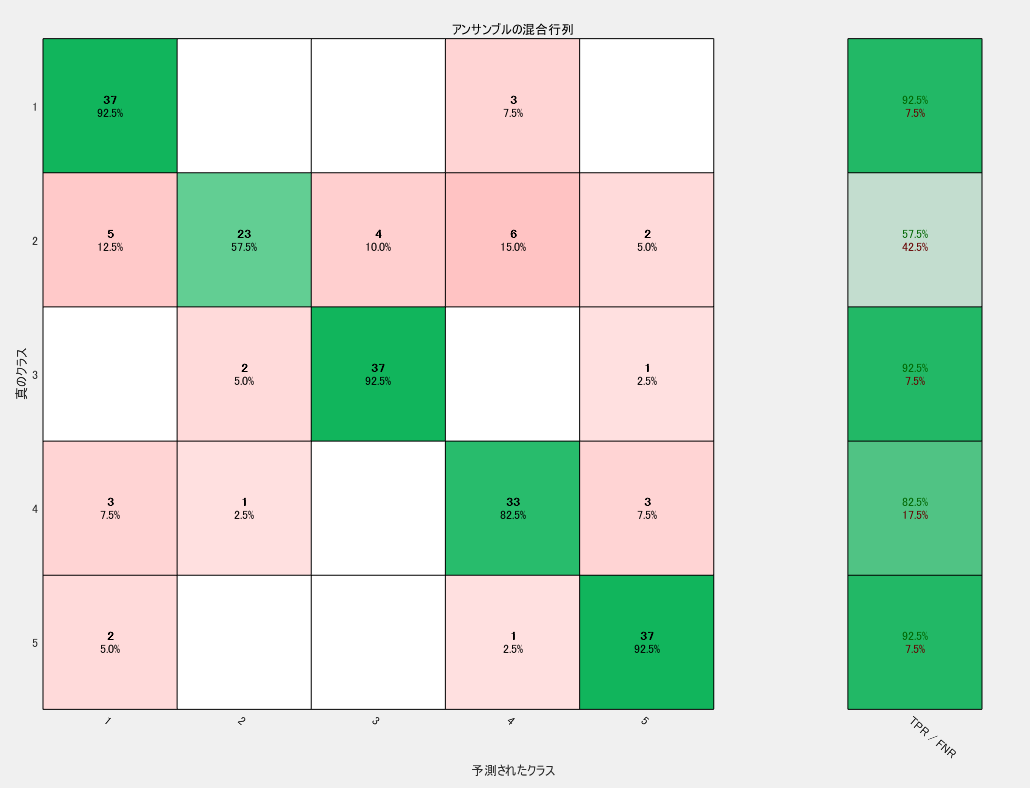

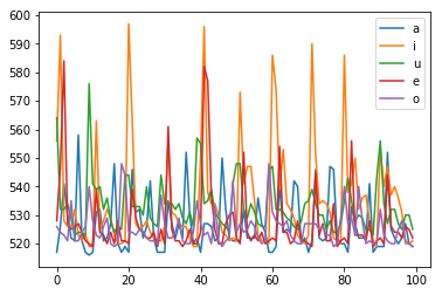
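A plus/minus/reference montage is normally used with a differential amplifier: it records the voltage difference between the two active electrodes, so interference that appears equally on both cancels. The following is a minimal Python sketch of that idea under stated assumptions. The sampling rate, the 50 Hz hum, the 120 Hz "muscle" component and the reference offset are all hypothetical values chosen for illustration, and `differential` is a hypothetical helper, not part of any recording software. Real amplifiers do this subtraction in hardware, and the cancellation is never perfect.

```python
import math

# Hypothetical sketch of the electrode montage: a differential amplifier
# records (chin - forehead) - (cheek - forehead), i.e. chin minus cheek.


def differential(v_plus, v_minus, v_ref):
    """Bipolar channel: (V+ - Vref) - (V- - Vref); the reference cancels."""
    return [(p - r) - (m - r) for p, m, r in zip(v_plus, v_minus, v_ref)]


FS = 1000  # assumed sampling rate in Hz
t = [n / FS for n in range(200)]

# Assumed interference (50 Hz mains hum) picked up equally by both electrodes.
hum = [0.5 * math.sin(2 * math.pi * 50 * x) for x in t]
# Assumed muscle activity seen only by the chin (+) electrode.
muscle = [0.1 * math.sin(2 * math.pi * 120 * x) for x in t]

v_chin = [h + m for h, m in zip(hum, muscle)]
v_cheek = list(hum)
v_forehead = [0.2] * len(t)  # assumed constant offset at the reference site

emg = differential(v_chin, v_cheek, v_forehead)
# The common-mode hum is removed; the muscle component remains
# (up to floating-point rounding).
print(max(abs(e - m) for e, m in zip(emg, muscle)))
```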

Result

- I analyzed the envelope of each EMG signal. The envelopes show that the signals for different vowels can be easily distinguished.

- Using a simple neural network in MATLAB, the EMG signals of different vowels can be correctly distinguished.
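The two results describe envelope analysis followed by a small neural network in MATLAB. The following is a Python stand-in for that pipeline, not the author's MATLAB code. It full-wave rectifies each signal and smooths it with a moving average to obtain the envelope, summarizes the envelope as mean values over three time segments, and trains a single-layer softmax network with plain gradient descent. Everything specific here is hypothetical: the vowel set, the amplitude profiles in `PROFILES`, the synthetic signals (used because no recordings are included in this document), the sampling rate, the window length and the training parameters.

```python
import math
import random

# Hypothetical vowel classes and envelope profiles (relative muscle activity in
# three consecutive time segments). Synthetic stand-ins for real recordings.
PROFILES = {"a": [1.0, 0.2, 0.2], "i": [0.2, 1.0, 0.2], "u": [0.2, 0.2, 1.0]}
VOWELS = sorted(PROFILES)


def synth(vowel, rng, fs=1000, seg_len=100, freq=100.0, noise=0.05):
    """Synthetic EMG-like signal: an amplitude-modulated sine plus Gaussian noise."""
    out = []
    for k, amp in enumerate(PROFILES[vowel]):
        for n in range(seg_len):
            t = (k * seg_len + n) / fs
            out.append(amp * math.sin(2 * math.pi * freq * t) + rng.gauss(0.0, noise))
    return out


def envelope(signal, window=25):
    """Full-wave rectify, then smooth with a centred moving average."""
    rect = [abs(x) for x in signal]
    half = window // 2
    env = []
    for i in range(len(rect)):
        lo, hi = max(0, i - half), min(len(rect), i + half + 1)
        env.append(sum(rect[lo:hi]) / (hi - lo))
    return env


def features(signal, n_segments=3):
    """Mean envelope value in each of n_segments equal time segments."""
    env = envelope(signal)
    seg = len(env) // n_segments
    return [sum(env[k * seg:(k + 1) * seg]) / seg for k in range(n_segments)]


def softmax(z):
    m = max(z)  # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


def scores(W, b, x):
    return [sum(w * xi for w, xi in zip(Wc, x)) + bc for Wc, bc in zip(W, b)]


def train(X, y, n_classes, lr=0.5, epochs=300):
    """Single-layer softmax network trained with full-batch gradient descent."""
    d = len(X[0])
    W = [[0.0] * d for _ in range(n_classes)]
    b = [0.0] * n_classes
    for _ in range(epochs):
        gW = [[0.0] * d for _ in range(n_classes)]
        gb = [0.0] * n_classes
        for x, label in zip(X, y):
            p = softmax(scores(W, b, x))
            for c in range(n_classes):
                err = p[c] - (1.0 if c == label else 0.0)  # cross-entropy gradient
                gb[c] += err
                for j in range(d):
                    gW[c][j] += err * x[j]
        for c in range(n_classes):
            b[c] -= lr * gb[c] / len(X)
            for j in range(d):
                W[c][j] -= lr * gW[c][j] / len(X)
    return W, b


def predict(W, b, x):
    s = scores(W, b, x)
    return s.index(max(s))


def make_set(rng, per_class):
    X, y = [], []
    for label, vowel in enumerate(VOWELS):
        for _ in range(per_class):
            X.append(features(synth(vowel, rng)))
            y.append(label)
    return X, y


rng = random.Random(0)
X_train, y_train = make_set(rng, 20)
X_test, y_test = make_set(rng, 10)
W, b = train(X_train, y_train, len(VOWELS))


def accuracy(X, y):
    return sum(predict(W, b, x) == label for x, label in zip(X, y)) / len(y)


train_acc = accuracy(X_train, y_train)
test_acc = accuracy(X_test, y_test)
print(f"train accuracy {train_acc:.2f}, test accuracy {test_acc:.2f}")
```

Rectify-and-smooth is only one common way to obtain an EMG envelope; an RMS window or a Hilbert-transform envelope are alternatives. Real recordings are likely to be less cleanly separated than these synthetic profiles.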